So speaking of AI, I used my current favourite Chatbot (Gemini 3 Flash) to dig deeper into vocal isolation models. I had been using Demucs, then Demucs FT (Fine Tuned), for a while, but seeing as the space is active and busy I was interested to know what else was going on.

Let's back up.

If you are recording live sound, which I do quite regularly at my local church, then having the sound of all the people singing in your mix is an ABSOLUTE GAMECHANGER. I also used crowd mics for recording my daughter's band concert - basically I will want to use ambience/crowd microphones for anything live. It's what makes the resulting mix sound like you are there, part of it, with the crowd, rather than at a studio performance.

Here's the problem - the crowd microphones are going to pick up the PA, of course. So step 1 is to use the right microphones and have them in the right place. I was lucky that someone had previously set up a pair of Samson C02 condenser mics at my local church, and they kicked my inspiration into overdrive for adding them into a mix. What I found, however, was that they were very low on the stage and pointed forward, so they picked up what was directly in front of them rather than the "wider bigger vibe". We moved them up onto stands, which helped a lot - they picked up a wider sound of the people, but the house PA sound was still very loud in them. I have since experimented with location and different mics. That work is ongoing, hence this is Part 1.

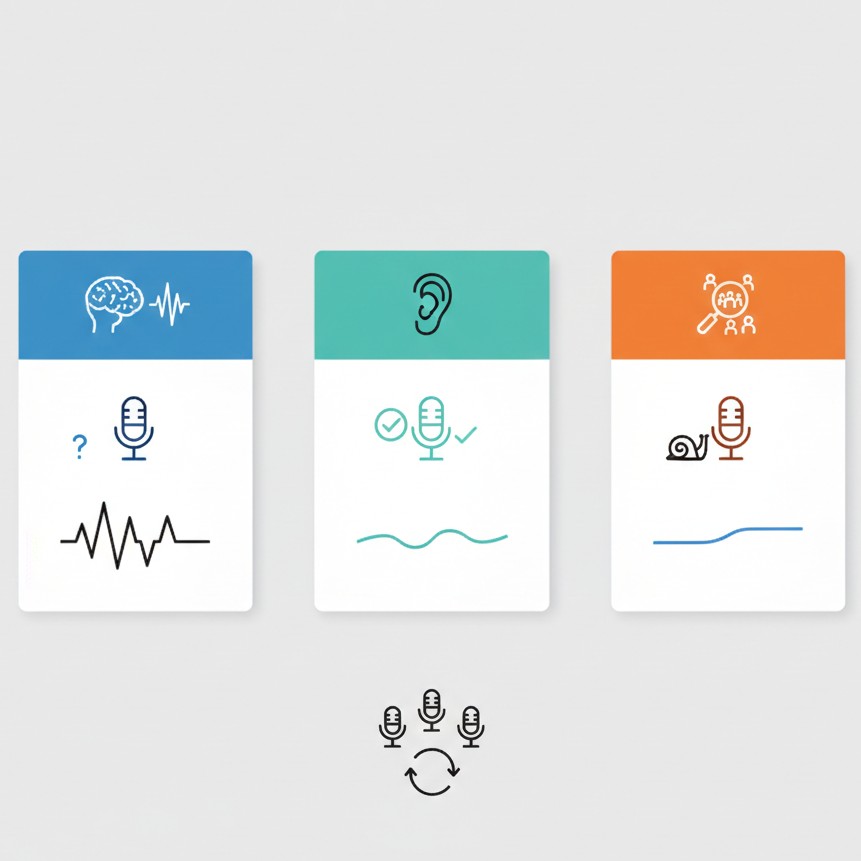

One way of reducing the house PA sound is to use the right sort of mic in the right sort of placement and use EQ/etc to draw out the people's voices...but it's the 2020s and we have software models for everything. Music isolation blew my mind when models overtook the algorithmic systems (thanks for your persistence Steinberg, it was a good effort but it was the wrong approach). So if I run an ambience mic through a model to separate just Voices and Not Voices, I get this wonderful ambience I can mix back in.

"Remind me why you can't just use the raw audio with some EQ and effects?"

As you turn up the crowd mics, even with a sculpted signal, the drums/bass/instruments picked up by the crowd mics start to overpower the direct recordings of the drums/bass/instruments. You will start getting that phasey reverby roomy echo which sounds more like mud than like the crowd. A little is okay, too much is meh. So if you really want the people big in the mix, isolate out the instruments.

The Chatbot talked to me about other models to try beyond HTDemucs FT (developed by Meta (Facebook) and trained on a massive set of internal data). There is the MelBand Roformer (developed by ByteDance (TikTok) and Kimberley Jensen), plus community expansions of it trained on extra data of messy real-world audio.

I discovered that for some of my recordings the MelBand Roformers were a lot better! But it is not one-size-fits-all, unfortunately - if you really want to go to town you would run all the models and mix the results together.
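Mixing the results together can be as simple as a weighted average of the vocal stems, sample by sample. The sketch below is only an illustration of that idea, not something I have tuned: `blend_stems` is a made-up helper name, it works on plain lists of float samples to stay dependency-free, and it assumes every stem came from the same input file at the same sample rate so the samples line up.

```python
from typing import List, Optional, Sequence


def blend_stems(
    stems: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> List[float]:
    """Weighted average of several vocal stems (hypothetical helper).

    Each stem is a sequence of float samples from a different model.
    Stems are assumed to be sample-aligned; the result is trimmed to
    the shortest stem.
    """
    if not stems:
        raise ValueError("need at least one stem")
    if weights is None:
        # Equal weighting when no preference is given.
        weights = [1.0] * len(stems)
    if len(weights) != len(stems):
        raise ValueError("one weight per stem is required")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")

    length = min(len(stem) for stem in stems)
    return [
        sum(w * stem[i] for w, stem in zip(weights, stems)) / total
        for i in range(length)
    ]
```

Raising the weight of one stem leans the blend towards that model, which is handy when one model clearly handles a passage better than the others.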

Okay, let's have a look/listen at an example - here is a recording straight from the crowd mics:

For reference here is what the microphones being held by the vocalists sounds like, mixed:

Notice that there is some instrument present, especially drums. They are dynamic mics and the vocalists are holding them close to their mouths so it's not getting much drums/instruments, but it is there. But also notice they are clear and crisp. That should be the predominant sound in the mix - not the mushy crowd mic version - but we want that underlying crowd sound as well!

I'm not going to mess with EQ and effects for this example - although that would make it sound better/more balanced - for the purpose of this discussion let's just listen to what the isolators do. To initially blow your mind like it did mine, here is just the instruments (for reference this was done with the MelBand Roformer InstVox Duality V2 model).

Righto - let's first listen to HTDemucs FT. You can run this yourself - make sure you have Python and Demucs installed (typically via pip install demucs) and execute:

    demucs -n htdemucs_ft "test_raw.wav" --two-stems=vocals

Of course it's not perfect! But wow, it is very, very clever. The main vocals are loud in there - coming back through the house PA quite strongly, which is to be expected - but the crowd is underneath. Not as much as I want - but save that for Part 2 - different mics and different placement to better pick up the people and not the house PA.

Now for MelBand Roformer Big Beta (community)

    audio-separator "test_raw.wav" --model_filename "melband_roformer_big_beta5e.ckpt" --mdxc_segment_size 256 --mdxc_overlap 2 --mdxc_batch_size 1 --use_autocast --output_format=WAV

Code tips: ask your favourite Chatbot. Some of these parameters are tuned for my laptop GPU, which is not very powerful.

Notice it dropped off a little towards the end, and it grabbed a bit more bass towards the start - I think that was more the bass guitar than vox. Not bad, but I don't think it beat HTDemucs.

MelBand Roformer original Kim version

    audio-separator "test_raw.wav" --model_filename "vocals_mel_band_roformer.ckpt" --mdxc_segment_size 256 --mdxc_overlap 2 --use_autocast --output_format=WAV

Similar, handled the end a bit better, and it picked up more nuance in the singing. For example, the word "Surely" at the start has been captured better in this model than the other two.

MelBand Roformer Duality

    audio-separator "test_raw.wav" --model_filename "melband_roformer_instvox_duality_v2.ckpt" --mdxc_segment_size 256 --mdxc_overlap 4 --use_autocast --output_format=WAV

Similar again, seemed to be slightly more gatey - it decided the gap between phrases was silence, which is technically correct. Didn't grab quite as much bass as the other two.
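The three audio-separator runs differ only in the model filename, the overlap and (for Big Beta) the batch size, so they are easy to script. Here is a rough Python sketch that builds the same command lines used in this post. The helper names `build_command` and `run_all` are my own invention, the flags are copied from the commands in this post rather than checked against the tool's documentation, and `run_all` assumes `audio-separator` is installed and on your PATH.

```python
import subprocess
from typing import List, Optional

# (model filename, overlap, batch size) exactly as used in this post.
# A batch size of None means the flag is left off the command line.
MODELS = [
    ("melband_roformer_big_beta5e.ckpt", 2, 1),
    ("vocals_mel_band_roformer.ckpt", 2, None),
    ("melband_roformer_instvox_duality_v2.ckpt", 4, None),
]


def build_command(
    input_path: str,
    model_filename: str,
    overlap: int,
    batch_size: Optional[int] = None,
) -> List[str]:
    """Build one audio-separator command as an argument list."""
    cmd = [
        "audio-separator", input_path,
        "--model_filename", model_filename,
        "--mdxc_segment_size", "256",
        "--mdxc_overlap", str(overlap),
    ]
    if batch_size is not None:
        cmd += ["--mdxc_batch_size", str(batch_size)]
    cmd += ["--use_autocast", "--output_format=WAV"]
    return cmd


def run_all(input_path: str) -> None:
    """Run every model in MODELS over the same input file, one at a time."""
    for model_filename, overlap, batch_size in MODELS:
        cmd = build_command(input_path, model_filename, overlap, batch_size)
        # check=True stops the loop if a model fails (e.g. out of GPU memory).
        subprocess.run(cmd, check=True)
```

Calling `run_all("test_raw.wav")` would then process the test clip with each model in turn, which makes side-by-side listening a lot less fiddly.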

Look, I could go with any of them. In this test snippet I reckon the winner is HTDemucs FT or MelBand Roformer Duality.

As a final closeout, this is what a people-less mix sounds like:

Notice there was an electric guitar being played, but the house had it down so low it was barely present in the crowd mics! But because I had all the channels recorded, I turned him up, because it sounded so good. So finally, with people mixed in, the point of all of this:

It brings out the "liveness" of the room. Because there was still a lot of the main vocalists in the isolated crowd track, they come through a bit echo-ey, but the fact the people are singing underneath really turns the mix around.
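For anyone wondering what "mixed in" means numerically: the isolated crowd track is scaled by a gain and summed with the main mix. The sketch below is an illustration only, not what my DAW does internally. The function names are made up, samples are plain floats in the range -1.0 to 1.0, and the -6 dB default is an arbitrary starting point rather than the level I used.

```python
from typing import List, Sequence


def db_to_gain(db: float) -> float:
    """Convert a level in decibels to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def mix_under(
    main: Sequence[float],
    crowd: Sequence[float],
    crowd_db: float = -6.0,  # assumed starting level, adjust by ear
) -> List[float]:
    """Sum the crowd stem under the main mix at the given level.

    Both inputs are float samples in [-1.0, 1.0] at the same sample rate.
    The result is trimmed to the shorter input and hard-clipped so it
    never leaves the valid range.
    """
    gain = db_to_gain(crowd_db)
    length = min(len(main), len(crowd))
    mixed = [main[i] + gain * crowd[i] for i in range(length)]
    return [max(-1.0, min(1.0, sample)) for sample in mixed]
```

Hard clipping is the crudest possible safety net. In a real mix you would pull the overall level down or use a limiter instead.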

There you have it! Using the power of AI to isolate vocals - but it's only as good as the original mic signals and I have room to make them better. Watch this space!
